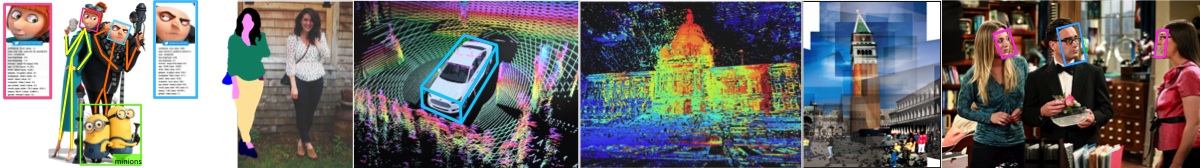

CSC 420: Introduction to Image Understanding

Undergraduate CourseFall 2015

Everyone has large photo collections these days. How can you intelligently find all pictures in which your dog appears? How can you find all pictures in which you are frowning? Can we make cars smart, e.g., can the car drive you to school while you finish your last homework? How can a home robot understand the environment, e.g., switch on a tv when being told so and serve you dinner? If you take a few pictures of your living room, can you reconstruct it in 3D (which allows you to render it from any new viewpoint and thus allows you to create a "virtual tour" of your room)? Can you reconstruct it from one image alone? How can you efficiently browse your home movie collection, e.g. find all shots in which Tom Cruise is chasing a bad guy?

Course Overview

Prerequisites: A second year course in data structures (e.g., CSC263H), first year calculus (e.g., MAT135Y), and linear algebra (e.g., MAT223H) are required. Students who have not taken CSC320H will be expected to do some extra reading (e.g., on image gradients). Matlab will extensively used in the programming excercises, so any prior exposure to it is a plus (but not a requirement).

Course Information

-

Time and Location

Fall 2015

Day: Tuesday and Thursday Time: 3pm-4pm Room: BA1200 Tutorials Room: BA1200Instructor

Sanja Fidler

Email: fidler@cs... Homepage: http://www.cs.toronto.edu/~fidler Office hours: Tue at 1.40-2.50pm

Fri at 11am-noon, or by

appointment (send email)

Information Sheet

The information sheet for the class is available here.

Programming Language(s)

You are expected to do some programming assignments for the class. You can code in either Matlab, Python or C. However, in class we will provide the examples and functions in Matlab. Note also that most Computer Vision code online is in Matlab so it's useful to learn it. Knowing C is only a plus since you can interface your C code to Matlab via "mex".

Please make sure you have access to MATLAB with the Image Processing Toolbox installed.

-

Useful tutorials:

- Matlab tutorial written by Prof. Stefan Roth

- Python tutorial written especially for this class by Olessia Karpova who took CSC420 last year. Thank you Olessia!

Forum

This class uses piazza. On this webpage, we will post announcements and assignments. The students will also be able to post questions and discussions in a forum style manner, either to their instructors or to their peers.

Please sign up here in the beginning of class.

Invited Speakers

We will have several speakers presenting in the course, including robotics, vision and ML professors, as well as last year's CSC420 students that are now doing Computer Vision in grad school.

- Oct 29: Tim Barfoot, Associate Professor, Autonomous Space Robotics Laboratory, UofT AbstractSlidesVideos

- Nov (TBA): Raquel Urtasun, Assistant Professor in the Machine Learning group at UofT

- Nov 12: Alexander Schwing, Postdoctoral Fellow in the Machine Learning group at UofT

Abstract:

In this talk I will describe a particular approach to visual route following for mobile robots that we have developed, called Visual Teach & Repeat (VT&R), and what I think the next steps are to make this system usable in real-world applications. We can think of VT&R as a simple form of simultaneous localization and mapping (without the loop closures) along with a path-tracking controller; the idea is to pilot a robot manually along a route once and then be able to repeat the route (in its own tracks) autonomously many, many times using only visual feedback. VT&R is useful for such applications as load delivery (mining), sample return (space exploration), and perimeter patrol (security). Despite having demonstrated this technique for over 500 km of driving on several different robots, there are still many challenges we must meet before we can say this technique is ready for real-world applications. These include (i) visual scene changes such as lighting, (ii) physical scene changes such as path obstructions, and (iii) vehicle changes such as tire wear. I'll discuss our progress to date in addressing these issues and the next steps moving forward. There will be lots of videos.

Dr. Timothy Barfoot (Associate Professor, University of Toronto Institute for Aerospace Studies -- UTIAS) holds the Canada Research Chair (Tier II) in Autonomous Space Robotics and works in the area of guidance, navigation, and control of mobile robots for space and terrestrial applications. He is interested in developing methods to allow mobile robots to operate over long periods of time in large-scale, unstructured, three-dimensional environments, using rich onboard sensing (e.g., cameras and laser rangefinders) and computation. Dr. Barfoot took up his position at UTIAS in May 2007, after spending four years at MDA Space Missions, where he developed autonomous vehicle navigation technologies for both planetary rovers and terrestrial applications such as underground mining. He is an Ontario Early Researcher Award holder and a licensed Professional Engineer in the Province of Ontario. He sits on the editorial boards of the International Journal of Robotics Research and the Journal of Field Robotics. He recently served as the General Chair of Field and Service Robotics (FSR) 2015, which was held in Toronto.

PhD Students:

- Oct 1: Daphne Ippolito, PhD student at UPenn

- Oct 22: Mian Wei, PhD student at UofT

Textbook

We will not directly follow any textbook, however, we will require some reading in the textbook below. Additional readings and material will be posted in the schedule table as well as the resources section.

-

Website

"Computer Vision: Algorithms and Applications"

Richard Szeliski

Springer, 2010

The textbook is freely available online and provides a great resource for introduction to computer vision.

We will be reading the Sept 3, 2010 version.

Requirements and Grading

Each student is expected to complete five assignments which will be in the form of problem sets and programming problems, and complete a project.

Assignments

Assignments will be given every two weeks. They will consist of problem sets and programming problems with the goal of deepening your understanding of the material covered in class. All solutions and programming should be done individually. There will be five assignments altogether, each worth 12% of the final grade.

Submission: Solutions to the assignments should be submitted through CDF. The preferred format is PDF, but we will also accept Word. Unless stated otherwise in the Assignments' instructions include the code (for exercises that ask for code) within the solution document. An ideal example of how the code can be included can be found here. We also don't mind if you print-screen your matlab functions and include the pictures as long as they are of good quality to be read. If you are using Matlab's built-in functions within your code you should not include them. But include all your code.

Deadline: The solutions to the assignments should be submitted by 11.59pm on the date they are due. Anything from 1 minute late to 24 hours will count as one late day.

Lateness policy: Each student will be given a total of 3 free late days. This means that you can hand in three of your assignments one day late, or one assignment three days late. It is up to you to make a good planning of your work. After you have used your 3 day budget, your late assignments will not be accepted.

Plagiarism: We take plagiarism very seriously. Everything you hand in to be marked, namely assignments and projects, must represent your own work. Read How not to plagiarize.

Project

Each student will be given a topic for the project. You will be able to choose from a list of projects, or propose your own project which will need to be discussed and approved by your instructor. You will need to hand in a report which will count 25% of your grade. Each student will also need to present and be capable to defend his/her work. The presentation will count 15% of the grade.

Assignments | 60%(5 assignments, each worth 12%) |

Project | 40% (report: 25%, presentation: 15%) |

Syllabus

The course will cover image formation, feature representation and detection, object and scene recognition and learning, multi-view geometry and video processing. Since Kinect is popular these days, we will also try to squeeze recognition with RGB-D data into the schedule.

| Image Processing |

|---|

Linear filters |

Edge detection |

| Features and matching |

Keypoint detection |

Local descriptors |

Matching |

Low-level and Mid-level grouping |

Segmentation |

Region proposals |

Hough voting |

Recognition |

Face detection and recognition |

Object recognition |

Object detection |

Part-based models |

Image labeling |

Geometry |

Image formation |

Stereo |

Multi-view reconstruction |

Kinect |

Video processing |

Motion |

Action recognition |

Schedule

| Date | Topic | Reading | Slides | Additional material | Assignments |

|---|---|---|---|---|---|

| Sept 15 | Course Introduction | lecture1.pdf | |||

| Image Processing | |||||

| Sept 17 | Linear Filters | Szeliski book, Ch 3.2 | lecture2.pdf | code: finding Waldo, smoothing, convolution | |

| Sept 22 | Edge Detection | Szeliski book, Ch 4.2 | lecture3.pdf | code: edges with Gaussian derivatives | Assignment 1: due Oct 3, 11.59pm, 2015 |

| Sept 24 | Edge Detection cont. | Szeliski book, Ch 4.2 | lecture4.pdf | ||

| Sept 29 | Image Pyramids | Szeliski book, Ch 3.5 | lecture5.pdf | ||

| Features and Matching | |||||

| Oct 1 | Keypoint Detection: Harris Corner Detector | Szeliski book, Ch 4.1.1 pages: 209-215 | lecture6.pdf | ||

| Oct 6 | Keypoint Detection: Scale Invariant Keypoints | Szeliski book, Ch 4.1.1 pages: 216-222 | lecture7.pdf | ||

| Oct 8 | Keypoint Detection: Scale Invariant Keypoints | lecture7 continued | Assignment 2: due Oct 18, 11.59pm, 2015 | ||

| Oct 13 | Local Descriptors: SIFT, Matching | Szeliski book, Ch 4.1.2 Lowe's SIFT paper | lecture8.pdf | code: compiled SIFT code, VLFeat's SIFT code | |

| Oct 15 | Robust Matching, Homographies | Szeliski book, Ch 6.1 | lecture9.pdf | code: Soccer and screen homography | |

| Geometry | |||||

| Oct 20 | Camera Models | Szeliski, 2.1.5, pp. 46-54Zisserman & Hartley, 153-158 | lecture10.pdf (hi-res version) | ||

| Oct 22 | Camera Models | lecture10 continued | Assignment 3: due Nov 6, 11.59pm, 2015 | ||

| Oct 27 | Homography revisited | lecture11.pdf (hi-res version) | Projects: due Dec 4, 11.59pm, 2015 | ||

| Oct 29 | Stereo: Parallel Optics | lecture12.pdf (hi-res version) | code: Yamaguchi et al. | ||

| Nov 3 | Stereo: General Case | Szeliski book, Ch. 11.1 Zisserman & Hartley, 239-261 | lecture13.pdf (hi-res version) | ||

| Recognition | |||||

| Nov 5 | Recognition: Overview | Grauman & Leibe, Visual Object Recognition | lecture14.pdf (hi-res version) | Assignment 4: due Nov 17, 11.59pm, 2015 | |

| Nov 12 | All You Wanted To Know About Neural Networks | Invited lecture: Alex Schwing | lecture15.pdf | Sara Sabour's tutorial on NNs (for Python) | |

| Nov 17 | Fast Retrieval | Sivic & Zisserman, Video Google | lecture16.pdf (hi-res version) | ||

| Nov 19 | Implicit Shape Model | B. Leibe et al., Robust Object Detection with Interleaved Categorization and Segmentation | lecture17.pdf (hi-res version) | Yukun Zhu's tutorial on caffe and classification with NNs | Assignment 5: due Nov 28, 11.59pm, 2015 |

| Nov 24 | The HOG Detector | HOG paper | lecture18.pdf (hi-res version) | Jialiang Wang's Tutorial on classification (HOG+SVM) | |

| Nov 26 | Deformable Part-based Model | DPM paper | lecture19.pdf (hi-res version) | ||

| Dec 1 | Segmentation | SLIC, Felzenswalb & Huttenlocher | lecture20.pdf (hi-res version) | ||

Feedback About Class

Whether you are enrolled in the class or just casually browsing the webpage, please leave feedback about the class / material. You can do it here. Thanks!

If you want to hear about a particular topic that is not planned in the regular course, please post it here.

Assignment Solutions: Hall of Fame

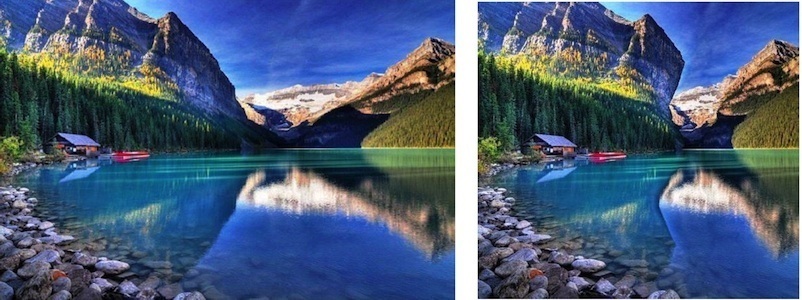

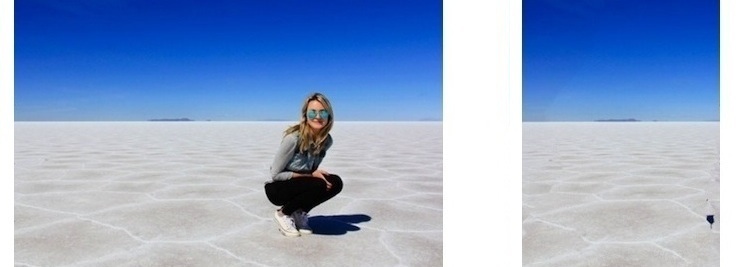

Assignment 1: Finding City Skylines and Seam Carving

The exercise was to remove horizontal and/or vertical seams, i.e. paths with the smallest sum of gradients. We followed Avidan and Shamir's "Seam Carving for Content-Aware Image Resizing" paper.

The second exercise was to trace a skyline of a city. Most solutions used the seam carving approach to do this, while one of the solutions try to segment out the sky.

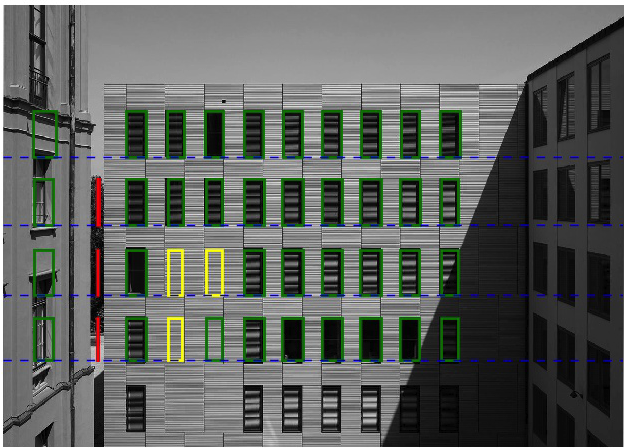

Assignment 2: Window Detection

The exercise was to detect all frontal windows in a given image. Even more extra credit was given to solutions that also detected floors of each building. We got some really great solutions!

Resources

- Forsyth and Ponce, Computer Vision: A Modern Approach

- Richard Hartley and Andrew Zisserman, Multiple View Geometry in Computer Vision

- Kristen Grauman and Bastian Leibe, short book on Visual Object Recognition

- Christopher M. Bishop, Pattern Recognition and Machine Learning

- Tom Mitchell, Machine Learning

- Short tutorial on getting started with Matlab

- CV online: wiki with computer vision concepts

- Li Fei-Fei, Rob Fergus, Antonio Torralba, Tutorial on Recognizing and Learning Object Categories

- Andrew Moore, Tutorial on Support Vector Machines

- Sebastian Nowozin, Christoph H. Lampert, Structured Learning and Prediction in Computer Vision (advanced Machine Learning Tutorial)

- Tutorial on writing fast Matlab code

- OpenCV library: open source computer vision library (c++)

- VLfeat: open source computer vision library (Matlab, c)

- LIBSVM: A Library for Support Vector Machines (Matlab, Python)

- Structured Edge Detection Toolbox: Very fast edge detector (up to 60 fps) with high accuracy

- Object detection code with Deformable Part-based Models

- Caffe: Deep learning features for image classification

- Bundler: Structure from Motion (SfM) for Unordered Image Collections

- SLIC superpixels: Very fast superpixel code

- UCM superpixels: Accurate superpixels

- Selective Search: code for computing bottom-up region proposals

- PASCAL VOC: Object recognition dataset

- KITTI: Autonomous driving dataset

- Reconstruction Meets Recognition Challenge (RMRC): Indoor recognition with RGB-D data

- LabelMe: Open image annotation tool

- ImageNet: Large-scale object dataset

- ReKognition API: Demo for face recognition and detection, image classification

- Photosynth: View the world in 3D

- Google goggles app

- Clothing parser demo by Tamara Berg's group

- A list of online computer vision demos

- The Computer Vision Industry, a list of CV companies maintained by David Lowe