Topics in Computer Vision (CSC2539):

Visual Recognition with Text

Winter 2017

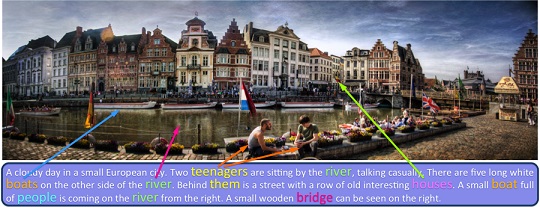

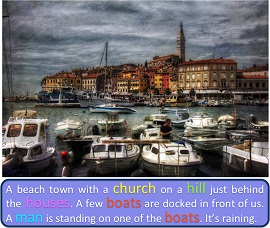

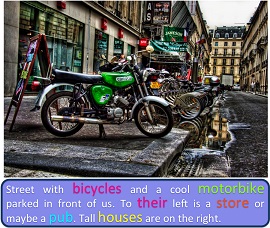

Images do not appear in isolation. On the web, for example, images are typically surrounded by informative text in the form of tags (e.g., on Flickr), captions (short summaries conveying something about the picture), and blog posts or news articles. In robotics, language is the most convenient way to teach an autonomous agent novel concepts or to point out the mistakes it is making. For example, when giving a robot a novel task such as "pass me the stapler", we could provide additional information, e.g., "it is next to the beer bottle on the table". This information could greatly simplify the visual parsing task. Conversely, it is also crucial that the agent communicates its understanding of the scene to the human, e.g., "I can't, I am watching TV on a sofa, next to the wine bottle."

Course overview

This class is a graduate seminar course in computer vision. The class will focus on visual recognition that exploits textual information. We will discuss various problems and applications in this domain and survey current papers on images/videos and text. The goal of the class is to understand cross-domain approaches, to analyze their strengths and weaknesses, and to identify interesting new directions for future research.

Prerequisites: Courses in computer vision and/or machine learning are highly recommended (otherwise you will need some additional reading), and basic programming skills are required for projects.

Course Information


Instructor

Sanja Fidler

Email: fidler@cs...
Homepage: http://www.cs.toronto.edu/~fidler
Office hours: by appointment (send email)

Forum

This class uses Piazza, where we will post announcements and assignments. Students can also post questions and start discussions in a forum-style manner, directed either to the instructor or to their peers.

Please sign up here at the beginning of the term.

Invited Lectures

- William Tunstall-Pedoe, British entrepreneur: Lecture on Question-Answering

- Frank Rudzicz, Scientist at Toronto Rehabilitation Institute, Assistant Professor at University of Toronto: Lecture on Natural Language Processing

- Jamie Kiros, PhD student, University of Toronto: Lecture on Fun with Vision and Language

- Makarand Tapaswi, Postdoctoral fellow, University of Toronto: Lecture on Story Understanding

- Kaustav Kundu, PhD student, University of Toronto: Lecture on Datasets and metrics for image captioning

Requirements

Each student will need to write two paper reviews each week, present once or twice in class (depending on enrollment), participate in class discussions, and complete a project (done individually or in pairs).

The final grade will consist of the following:

| Component | Weight |
|---|---|
| Participation (attendance, participation in discussions, reviews) | 15% |
| Presentation (presentation of papers in class) | 25% |
| Project (proposal, final report) | 60% |
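The final grade is a weighted sum of the three components listed in the grading table. As an illustration only, the minimal Python sketch below combines per-component scores using those weights; the 0 to 100 score scale, the names `WEIGHTS` and `final_grade`, and the example scores are hypothetical and are not part of the official course policy.

```python
# Hypothetical helper: weights come from the grading table (15% / 25% / 60%);
# component scores are assumed to be on a 0-100 scale.
WEIGHTS = {"participation": 0.15, "presentation": 0.25, "project": 0.60}


def final_grade(scores: dict) -> float:
    """Return the weighted final grade (0-100) from per-component scores."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing component scores: {sorted(missing)}")
    # Weighted sum: each component contributes its score times its weight.
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Example with made-up scores.
print(round(final_grade({"participation": 90, "presentation": 80, "project": 85}), 2))
```

Because the weights sum to 1, a student scoring the same value on every component receives exactly that value as the final grade.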

Detailed Requirements

Paper reviewing

Every week (except for the first two) we will read 2 to 3 papers. The success of the in-class discussion will therefore depend on how well prepared students come to class. Each student is expected to read all the papers that will be discussed and to write detailed reviews of two of them. Depending on enrollment, each student will also need to present a paper in class. When you present, you do not need to hand in a review.

Deadline: Reviews are due one day before class.

| Structure of the review |
|---|
| Short summary of the paper |
| Main contributions |
| Positive and negative points |
| How strong is the evaluation? |
| Possible directions for future work |

Presentation

Depending on enrollment, each student will need to present a few papers in class. The presentation should be clear and well rehearsed, and the presenter should read the assigned paper and related work in enough detail to lead a discussion and answer questions. Extra credit will be given to students who also prepare a simple experimental demo showing how the method works in practice.

A presentation should be roughly 20 minutes long (please time it beforehand so that you do not go over time). Typically this is about 15 to 20 slides. You may reuse material from presentations on the web as long as you cite the source fairly. In the presentation, also provide the citation for the paper you present and for any related work you reference.

Deadline: The presentation should be handed in one day before class (or earlier if you would like feedback).

| Structure of presentation |
|---|
| High-level overview with contributions |
| Main motivation |
| Clear statement of the problem |
| Overview of the technical approach |
| Strengths/weaknesses of the approach |
| Overview of the experimental evaluation |
| Strengths/weaknesses of evaluation |
| Discussion: future direction, links to other work |

Project

Each student will need to write a short project proposal at the beginning of the term (in January). The projects will be research oriented. In the middle of the term, you will need to hand in a progress report. The final project report is due one week before the end of the term, and the project will be presented in the last lecture (April) in a short presentation of roughly 15 to 20 minutes.

Students can work on projects individually or in pairs. The topic can be one that students come up with themselves or with the help of the instructor. The grade will depend on the ideas, how well you present them in the report, how well you position your work in the related literature, how thorough your experiments are, and how thoughtful your conclusions are.

Syllabus

We will first survey a few current methods in visual object recognition and scene understanding, as well as basic Natural Language Processing. The main focus of the course will be on vision and on how to exploit natural language to learn visual concepts, improve visual parsing, perform retrieval, and generate natural-language descriptions.

Tentative Syllabus

| Area | Topics |
|---|---|
| Image/Video understanding | object recognition; image labeling; scene understanding |
| Natural Language Processing | parsing, part-of-speech tagging; coreference resolution |
| Vision and Language | captioning; retrieval; learning visual models from text; question-answering; visual-text alignment; zero-shot recognition; image generation (from text); visual explanations; visual word-sense disambiguation |

Schedule

| Date | Topic | Reading | Presenter(s) | Slides |
|---|---|---|---|---|
| Jan 11 | Intro | | Jamie Kiros (invited lecture) | |
| Jan 18 | Basics and Popular Topics in NLP | | Frank Rudzicz (invited lecture) | |
| | Readings on Images/Videos and Text | | | |
| Jan 25 | Image Captioning: Datasets and metrics | | Kaustav Kundu | slides |
| | Image Captioning | Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models [PDF] R. Kiros, R. Salakhutdinov, R. S. Zemel | David Madras | slides |
| Feb 1 | Image Captioning | Show, Attend and Tell: Neural Image Caption Generation with Visual Attention [PDF] Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard Zemel, Yoshua Bengio | Katherine Ge | slides |
| | Paragraph Generation | A Hierarchical Approach for Generating Descriptive Image Paragraphs [PDF] Jonathan Krause, Justin Johnson, Ranjay Krishna, Li Fei-Fei | Tianyang Liu | slides |
| Feb 8 | Dialog Systems | Visual Dialog [PDF] Abhishek Das, Satwik Kottur, Khushi Gupta, Avi Singh, Deshraj Yadav, Jose M. F. Moura, Devi Parikh, Dhruv Batra | Sayyed Nezhadi | slides |
| | Domain adaptation | Learning Aligned Cross-Modal Representations from Weakly Aligned Data [PDF] L. Castrejon, Y. Aytar, C. Vondrick, H. Pirsiavash, A. Torralba | Lluis Castrejon (invited) | slides |
| Feb 14 | Question-Answering | Question-Answering in Industry | William Tunstall-Pedoe (invited talk) | |
| Feb 15 | Question-Answering | Ask Me Anything: Free-form Visual Question Answering Based on Knowledge from External Sources [PDF] Qi Wu, Peng Wang, Chunhua Shen, Anthony Dick, Anton van den Hengel | Paul Vicol | slides |
| | Retrieval | Order-Embeddings of Images and Language [PDF] Ivan Vendrov, Ryan Kiros, Sanja Fidler, Raquel Urtasun | Aryan Arbabi | slides |
| Mar 1 | Understanding Diagrams | A Diagram Is Worth A Dozen Images [PDF] Aniruddha Kembhavi, Mike Salvato, Eric Kolve, Minjoon Seo, Hannaneh Hajishirzi, Ali Farhadi | Ramin Zaviehgard | slides |
| | 0-shot Learning | Predicting Deep Zero-Shot Convolutional Neural Networks using Textual Descriptions [PDF] Jimmy Ba, Kevin Swersky, Sanja Fidler, Ruslan Salakhutdinov | Fartash Faghri | slides |

Resources

Tutorials, related courses:

- Introduction to Neural Networks, CSC321 course at University of Toronto

- Course on Convolutional Neural Networks, CS231n course at Stanford University

- Deep Learning for Natural Language Processing, CS224d course at Stanford University

- Course on Probabilistic Graphical Models, CSC412 course at University of Toronto (advanced machine learning)

Software:

- Caffe: Deep learning for image classification

- TensorFlow: Open Source Software Library for Machine Intelligence (good software for deep learning)

- Theano: Deep learning library

- mxnet: Deep Learning library

- Torch: Scientific computing framework with wide support for machine learning algorithms

- LIBSVM: A Library for Support Vector Machines (Matlab, Python)

- scikit-learn: Machine learning in Python

Datasets:

- Microsoft COCO: Large-scale image recognition, segmentation, and captioning dataset

- Flickr30K: Image captioning dataset

- Flickr30K Entities: Flickr30K with phrase-to-region correspondences

- Abstract scenes: Clipart dataset with captions, fill-in-the-blank

- MovieDescription: a dataset for automatic description of movie clips

- Text-to-image coreference: multi-sentence descriptions of RGB-D scenes, annotations for image-to-text and text-to-text coreference

- VQA: Visual question answering dataset

- DAQUAR: Visual question answering with RGB-D images

- Madlibs: Visual Madlibs (fill-in-the-blank)

- MovieQA: a dataset for question-answering about stories

- BookCorpus: a corpus of 11,000 books

- Write a Classifier: a dataset of Wikipedia articles about birds

Online demos:

- Lots of cool Toronto Deep Learning Demos: image classification and captioning demos

- Lots of cool demos for ConvNets by Andrej Karpathy

- Reinforcement Learning with Neural Nets (read paper for more info)

- Places: scene classification with neural nets

- CRF as RNN: Semantic Image Segmentation

- drawNet: visualization of ConvNet activations

- Visualization of ConvNets for digit classification

Main conferences:

- NIPS (Neural Information Processing Systems)

- ICML (International Conference on Machine Learning)

- ICLR (International Conference on Learning Representations)

- AISTATS (International Conference on Artificial Intelligence and Statistics)

- CVPR (IEEE Conference on Computer Vision and Pattern Recognition)

- ICCV (International Conference on Computer Vision)

- ECCV (European Conference on Computer Vision)

- ACL (Association for Computational Linguistics)

- EMNLP (Conference on Empirical Methods in Natural Language Processing)