Topics in Computer Vision (CSC2523):

Visual Recognition with Text

Winter 2015

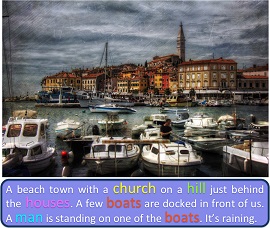

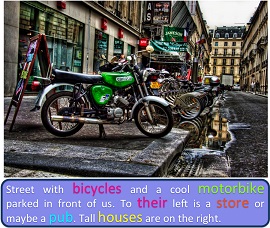

Images do not appear in isolation. For example, on the web images are typically surrounded by informative text in the form of tags (e.g., on Flickr), captions (short summaries conveying something about the picture), and blogs/news articles, etc. In robotics, language is the most convenient way to teach an autonomous agent novel concepts or to communicate the mistakes it is making. For example, when providing a novel task to a robot, such as "pass me the stapler", we could provide additional information, e.g., "it is next to the beer bottle on the table". This information could be used to greatly simplify the parsing task. Conversely, it also crucial that the agent communicates its understanding of the scene to the human, e.g., "I can't, I am watching tv on a sofa, next to the wine bottle."

Course overview

This class is a graduate seminar course in computer vision. The class will focus on the topic of visual recognition by exploiting textual information. We will discuss various problems and applications in this domain, and survey the current papers on the topic of images/videos and text. The goal of the class will be to understand the cross-domain approaches, to analyze their strengths and weaknesses, as well as to identify interesting new directions for future research.

Prerequisites: Courses in computer vision and/or machine learning (e.g., CSC320, CSC420) are highly recommended (otherwise you will need some additional reading), and basic programming skills are required for projects.

Course Information

-

Time and Location

Winter 2015

Day: Wednesday Time: 11am-1pm Room: HA 410 (Haultain Bldg)Instructor

Sanja Fidler

Email: fidler@cs... Homepage: http://www.cs.toronto.edu/~fidler Office hours: by appointment (send email)

Forum

This class uses piazza. On this webpage, we will post announcements and assignments. The students will also be able to post questions and discussions in a forum style manner, either to their instructors or to their peers.

Please sign up here in the beginning of class.

Research Outcome

We are happy to announce that four projects for this class has been published in top conferences:

Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading BooksYukun Zhu*, Ryan Kiros*, Richard Zemel, Ruslan Salakhutdinov, Raquel Urtasun, Antonio Torralba, Sanja Fidler In International Conference on Computer Vision (ICCV), Santiago, Chile, 2015 * Denotes equal contribution @inproceedings{ZhuICCV15, Books are a rich source of both fine-grained information, how a character, an object or a scene looks like, as well as high-level semantics, what someone is thinking, feeling and how these states evolve through a story. This paper aims to align books to their movie releases in order to provide rich descriptive explanations for visual content that go semantically far beyond the captions available in current datasets. To align movies and books we exploit a neural sentence embedding that is trained in an unsupervised way from a large corpus of books, as well as a video-text neural embedding for computing similarities between movie clips and sentences in the book. We propose a context-aware CNN to combine information from multiple sources. We demonstrate good quantitative performance for movie/book alignment and show several qualitative examples that showcase the diversity of tasks our model can be used for.

Lost Shopping! Monocular Localization in Large Indoor SpacesShenlong Wang, Sanja Fidler, Raquel Urtasun In International Conference on Computer Vision (ICCV), Santiago, Chile, 2015 @inproceedings{WangICCV15, In this paper we propose a novel approach to localization in very large indoor spaces (i.e., 200+ store shopping malls) that takes a single image and a floor plan of the environment as input. We formulate the localization problem as inference in a Markov random field, which jointly reasons about text detection (localizing shop's names in the image with precise bounding boxes), shop facade segmentation, as well as camera's rotation and translation within the entire shopping mall. The power of our approach is that it does not use any prior information about appearance and instead exploits text detections corresponding to the shop names. This makes our method applicable to a variety of domains and robust to store appearance variation across countries, seasons, and illumination conditions. We demonstrate the performance of our approach in a new dataset we collected of two very large shopping malls, and show the power of holistic reasoning.

Predicting Deep Zero-Shot Convolutional Neural Networks using Textual DescriptionsJimmy Ba, Kevin Swersky, Sanja Fidler, Ruslan Salakhutdinov In International Conference on Computer Vision (ICCV), Santiago, Chile, 2015 @inproceedings{BaICCV15, One of the main challenges in Zero-Shot Learning of visual categories is gathering semantic attributes to accompany images. Recent work has shown that learning from textual descriptions, such as Wikipedia articles, avoids the problem of having to explicitly define these attributes. We present a new model that can classify unseen categories from their textual description. Specifically, we use text features to predict the output weights of both the convolutional and the fully connected layers in a deep convolutional neural network (CNN). We take advantage of the architecture of CNNs and learn features at different layers, rather than just learning an embedding space for both modalities, as is common with existing approaches. The proposed model also allows us to automatically generate a list of pseudo- attributes for each visual category consisting of words from Wikipedia articles. We train our models end-to-end us- ing the Caltech-UCSD bird and flower datasets and evaluate both ROC and Precision-Recall curves. Our empirical results show that the proposed model significantly outperforms previous methods.

Skip-Thought VectorsRyan Kiros, Yukun Zhu, Ruslan Salakhutdinov, Richard Zemel, Antonio Torralba, Raquel Urtasun, Sanja Fidler Neural Information Processing Systems (NIPS), Montreal, Canada, 2015 @inproceedings{KirosNIPS15, We describe an approach for unsupervised learning of a generic, distributed sentence encoder. Using the continuity of text from books, we train an encoder-decoder model that tries to reconstruct the surrounding sentences of an encoded passage. Sentences that share semantic and syntactic properties are thus mapped to similar vector representations. We next introduce a simple vocabulary expansion method to encode words that were not seen as part of training, allowing us to expand our vocabulary to a million words. After training our model, we extract and evaluate our vectors with linear models on 8 tasks: semantic relatedness, paraphrase detection, image-sentence ranking, question-type classification and 4 benchmark sentiment and subjectivity datasets. The end result is an off-the-shelf encoder that can produce highly generic sentence representations that are robust and perform well in practice. We will make our encoder publicly available. |

Requirements

Each student will need to write two paper reviews each week, present once or twice in class (depending on enrollment), participate in class discussions, and complete a project (done individually or in pairs).

Grading (click to Expand / Collapse)

| The final grade will consist of the following | |

|---|---|

Participation (attendance, participation in discussions, reviews) | 25% |

Presentation (presentation of papers in class) | 35% |

Project (proposal, final report) | 40% |

Detailed Requirements (click to Expand / Collapse)

Paper reviewing

Every week (except for the first two) we will read 2 to 3 papers. The success of the discussion in class will thus be due to how prepared the students come to class. Each student is expected to read all the papers that will be discussed and write two detailed reviews about the selected two papers. Depending on enrollment, each student will need to also present a paper in class. When you present, you do not need to hand in the review.

Deadline: The reviews will be due one day before the class.

| Structure of the review |

|---|

Short summary of the paper |

Main contributions |

Positive and negatives points |

How strong is the evaluation? |

Possible directions for future work |

Presentation

Depending on enrollment, each student will need to present a few papers in class. The presentation should be clear and practiced and the student should read the assigned paper and related work in enough detail to be able to lead a discussion and answer questions. Extra credit will be given to students who also prepare a simple experimental demo highlighting how the method works in practice.

A presentation should be roughly 20 minutes long (please time it beforehand so that you do not go overtime). Typically this is about 15 to 20 slides. You are allowed to take some material from presentations on the web as long as you cite the source fairly. In the presentation, also provide the citation to the paper you present and to any other related work you reference.

Deadline: The presentation should be handed in one day before the class (or before if you want feedback).

| Structure of presentation: |

|---|

High-level overview with contributions |

Main motivation |

Clear statement of the problem |

Overview of the technical approach |

Strengths/weaknesses of the approach |

Overview of the experimental evaluation |

Strengths/weaknesses of evaluation |

Discussion: future direction, links to other work |

Project

Each student will need to write a short project proposal in the beginning of the class (in January). The projects will be research oriented. In the middle of semester course you will need to hand in a progress report. One week prior to the end of the class the final project report will need to be handed in and presented in the last lecture of the class (April). This will be a short, roughly 15-20 min, presentation.

The students can work on projects individually or in pairs. The project can be an interesting topic that the student comes up with himself/herself or with the help of the instructor. The grade will depend on the ideas, how well you present them in the report, how well you position your work in the related literature, how thorough are your experiments and how thoughful are your conclusions.

Here is a list of possible topics for the projects.

Syllabus

We will first survey a few current methods on visual object recognition and scene understanding, as well as basic Natural Language Processing. The main focus of the course will be on vision and how to exploit natural language to learn visual concepts, improve visual parsing, do retrieval, as well as lingual description generation.

| Visual recognition fundamentals | |

|---|---|

object recognition | |

image labeling | |

scene understanding | Natural Language Processing |

parsing, part-of-speech tagging | |

coreference resolution | Images/videos and text |

image tags | |

visual word-sense disambiguation | |

retrieval with complex lingual queries | |

visual concept grounding | |

description generation |

Schedule

We will have a guest lecture by Dr. Mohit Bansal, TTI-Chicago, on popular NLP topics and state-of-the-art techniques.

| Date | Topic | Reading | Presenter(s) | Slides | ||

|---|---|---|---|---|---|---|

| Basics in Image Understanding and Natural Language Processing | ||||||

| Jan 14 | Course Intro & Overview of Computer Vision | Sanja Fidler | intro lecture rec. lecture |

|||

| Jan 21 | Basics and Popular Topics in NLP | Mohit Bansal (invited lecture) | slides | |||

| Readings on Images/Videos and Text | ||||||

| Jan 28 | Description generation | Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models [PDF] R. Kiros, R. Salakhutdinov, R. S. Zemel | Ryan Kiros | |||

| Description generation | Deep Visual-Semantic Alignments for Generating Image Descriptions [PDF] A. Karpathy, L. Fei-Fei | Ivan Vendrov | slides | |||

| Feb 4 | Datasets and metrics | Kaustav Kundu | slides | |||

| Description generation | Every Picture Tells a Story: Generating Sentences for Images [PDF] A. Farhadi, M. Hejrati, M. A. Sadeghi, P. Young, C. Rashtchian, J. Hockenmaier, D. A. Forsyth | Yukun Zhu | slides | |||

| Feb 11 | Description generation (video) | Translating Video Content to Natural Language Descriptions [PDF] M. Rohrbach, W. Qiu, I. Titov, S. Thater, M. Pinkal, B. Schiele | Patricia Thaine | slides | ||

| Image generation (from text) | Learning the Visual Interpretation of Sentences [PDF] C. L. Zitnick, D. Parikh, L. Vanderwende | Shenlong Wang | slides | |||

| Feb 18 | Reading week (no class) | |||||

| Feb 25 | Learning visual models from text | Connecting Modalities: Semi-supervised Segmentation and Annotation of Images Using Unaligned Text Corpora [PDF] R. Socher, L. Fei-Fei | Jake Snell | slides | ||

| Learning visual models from text | Beyond Nouns: Exploiting Prepositions and Comparative Adjectives for Learning Visual Classifiers [PDF] A. Gupta, L. S. Davis | Arvid Frydenlund | slides | |||

| March 4 | Description generation | Show, Attend and Tell: Neural Image Caption Generation with Visual Attention [PDF] K. Xu, J. Ba, R. Kiros, K. Cho, A. Courville, R. Salakhutdinov, R. Zemel, Y. Bengio | Jimmy Ba | |||

| Learning visual models from web | Inferring the Why in Images [PDF] H. Pirsiavash, C. Vondrick, A. Torralba | Micha Livne | slides | |||

| March 11 | Zero-shot visual learning via text | Write a Classifier: Zero-Shot Learning Using Purely Textual Descriptions [PDF] M. Elhoseiny, B. Saleh, A. Elgammal | Jimmy Ba | |||

| Learning visual models from the web | Learning Everything about Anything: Webly-Supervised Visual Concept Learning [PDF] S. K. Divvala, A. Farhadi, C. Guestrin | Patricia Thaine | slides | |||

| March 18 | Word-sense disambiguation | Unsupervised Learning of Visual Sense Models for Polysemous Words [PDF] K. Saenko, T. Darrell | Kamyar Seyed Ghasemipour | |||

| Question and answering | A Multi-World Approach to Question Answering about Real-World Scenes based on Uncertain Input [PDF] M. Malinowski, M. Fritz | Ivan Vendrov | slides | |||

| March 25 | Learning visual models from text and video | Joint person naming in videos and coreference resolution in text [PDF] V. Ramanathan, A. Joulin, P. Liang, L. Fei-Fei | Yukun Zhu | slides | ||

| Visual retrieval via complex lingual queries | Visual Semantic Search: Retrieving Videos via Complex Textual Queries [PDF] D. Lin, S. Fidler, C. Kong, R. Urtasun | Micha Livne | slides | |||

Resources

coming soon